Facial recognition

Cardiff resident launches first UK legal challenge to police use of facial recognition technology in public spaces

Posted on 13 Jun 2018

A Cardiff resident has today launched the first legal challenge to a UK police force’s use of automated facial recognition (AFR) technology. He believes he was scanned by South Wales Police at an anti-arms protest and while doing his Christmas shopping.

Ed Bridges – represented by human rights organisation Liberty – has written to Chief Constable Matt Jukes demanding that South Wales Police immediately end its ongoing use of AFR technology because it violates the privacy rights of everyone within range of the cameras, has a chilling effect on protest, discriminates against women and BAME people, and breaches data protection laws.

Ed will take South Wales Police to court if the force refuses. He is calling on the public to back his case through the crowdfunding site CrowdJustice, so that he can challenge this intrusive policing practice, which has been rolled out in a secretive process with no public participation or debate.

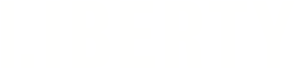

South Wales Police has used facial recognition in public spaces on at least 20 occasions since May 2017. Surveillance cameras equipped with AFR software scan the faces of passers-by, making unique biometric maps of their faces. These maps are then compared to and matched with other facial images on bespoke police databases.

On one occasion – at the 2017 Champions League final in Cardiff – the technology was later found to have wrongly identified more than 2,200 people as possible criminals.

Ed’s face has likely been mapped and his image stored at least twice. He believes he was scanned as a passer-by on a busy shopping street in Cardiff in the days before Christmas, and then again while protesting outside the Cardiff Arms Fair in March 2018.

Members of the public scanned by AFR technology have not given their consent and are often completely unaware it is in use. The technology’s use is not authorised by any law and the Government has not provided any policies or guidance on it. No independent oversight body regulates it.

Ed Bridges said: “The police are supposed to protect us, and their presence should make us feel safe – but I know first-hand how intimidating their use of facial recognition technology is. Indiscriminately scanning everyone going about their daily business makes our privacy rights meaningless. The inevitable conclusion is that people will change their behaviour or feel scared to protest or express themselves freely – in short, we’ll be less free.

“The police have used this intrusive technology throughout Cardiff with no warning, no explanation of how it works and no opportunity for us to consent. They’ve used it on protesters and on shoppers. This sort of dystopian policing has no place in our city or any other.”

Corey Stoughton, Liberty’s Advocacy Director, said: “Police’s creeping rollout of facial recognition into our streets and public spaces is a poisonous cocktail – it shows a disregard for democratic scrutiny, an indifference to discrimination and a rejection of the public’s fundamental rights to privacy and free expression.

“Scanning thousands of our faces and comparing them to shady databases with wildly inaccurate results has seriously chilling implications for our freedom and puts everyone in a continuous police line-up that carries a huge risk of injustice.”

South Wales Police and facial recognition

AFR technology scans the faces of all passers-by in real-time. The software measures their biometric facial characteristics, creating unique facial maps in the form of numerical codes. These codes are then compared to those of other images on bespoke databases.
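The matching step described above can be illustrated with a minimal sketch. It assumes each face has already been reduced to a short list of numbers, and that two codes are compared using cosine similarity against a fixed threshold. The function names, the example codes and the threshold are all hypothetical; the method actually used by the software deployed by South Wales Police is not described here.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two numerical face codes (closer to 1.0 means more alike)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_matches(probe, watchlist, threshold=0.9):
    """Return (name, score) pairs for watchlist codes at or above the threshold.

    The threshold is an assumed value: lowering it flags more people,
    including more who are not on the watchlist at all.
    """
    matches = []
    for name, code in watchlist.items():
        score = cosine_similarity(probe, code)
        if score >= threshold:
            matches.append((name, score))
    # Highest-scoring candidates first
    return sorted(matches, key=lambda m: m[1], reverse=True)


# Hypothetical three-number codes; real systems use far longer ones.
watchlist = {
    "entry_a": [0.9, 0.1, 0.3],
    "entry_b": [0.1, 0.8, 0.5],
}
passer_by = [0.88, 0.12, 0.31]

print(find_matches(passer_by, watchlist))
```

In this sketch every passer-by is compared against every entry on the list, which is why anyone within range of the camera is processed whether or not they are of interest to the police.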

Three UK police forces have used AFR technology in public spaces since June 2015 – South Wales, the Metropolitan Police and Leicestershire Police. South Wales has been at the forefront of its deployment, using the technology in public spaces at least 20 times.

South Wales Police has admitted using AFR technology to target petty criminals, such as ticket touts and pickpockets outside football matches, but it has also used the technology on protesters.

On 27 March 2018, the police used AFR technology at a protest outside the Defence, Procurement, Research, Technology and Exportability Exhibition – the ‘Cardiff Arms Fair’. Ed attended the protest and he believes he, like many others there, was scanned by the AFR camera opposite the fair’s main entrance.

Protesters were not aware that facial recognition would be deployed and the police did not provide any information at the time of the event.

Freedom of Information requests have revealed that South Wales Police’s use of AFR technology has produced ‘true matches’ less than nine per cent of the time – around 91 per cent of ‘matches’ were misidentifications of innocent members of the public.

South Wales Police has wrongly identified 2,451 people, 31 of whom were asked to confirm their identities. Only 15 arrests have been linked to the use of AFR.
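As a rough check on how these figures fit together, the sketch below works backwards from the 2,451 wrong identifications and the 91 per cent misidentification rate to an implied total number of ‘matches’. The total is not stated in this release, so the result is only an estimate based on rounded percentages, and the function name is hypothetical.

```python
def implied_totals(false_matches, false_share):
    """Estimate the total and true 'matches' from the false count and false share."""
    total = round(false_matches / false_share)
    true_matches = total - false_matches
    return total, true_matches


# Figures quoted above: 2,451 wrong identifications, about 91 per cent of all 'matches'.
total, true_matches = implied_totals(2451, 0.91)

print("Implied total matches:", total)
print("Implied true matches:", true_matches)
print("Implied true-match rate (%):", round(true_matches / total * 100, 1))
```

Because the published percentages are rounded, the implied totals should be read as approximate.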

Images of all passers-by, whether or not they are true matches, are stored by the force for 31 days – potentially without their knowledge.

A letter to the Chief Constable of South Wales Police, sent by Liberty on behalf of Ed Bridges, threatens legal action if the force does not stop using AFR technology, because it:

- Violates the general public’s right to privacy by indiscriminately scanning, mapping and checking the identity of every person within the camera’s range, capturing personal biometric data without consent – and can lead to innocent people being stopped and questioned by police.

- Interferes with freedom of expression and protest rights, having a chilling effect on people’s attendance at public events and protests. The presence of a police AFR van can be highly intimidating and affect people’s behaviour by sending the message that they are being watched and can be identified, tracked, and marked for further police action.

- Discriminates against women and BAME people. Studies have shown AFR technology disproportionately misidentifies female and non-white faces, meaning they are more likely to be wrongly stopped and questioned by police and to have their images retained.

- Breaches data protection laws. The processing of personal data cannot be lawful because there is no law providing any detailed regulation of AFR use. The vast majority of personal data processed by the technology is also irrelevant to law enforcement – belonging to innocent members of the public going about their business – and so the practice is excessive and unnecessary.

Ed has given the police 14 days to respond or he will start legal proceedings. The force is due to deploy AFR technology again at a Rolling Stones concert on 15 June.

Contact the Liberty press office on 020 7378 3656 / 07973 831 128 or pressoffice@libertyhumanrights.org.uk
